Pathfinders Newmoonsletter, April 2024

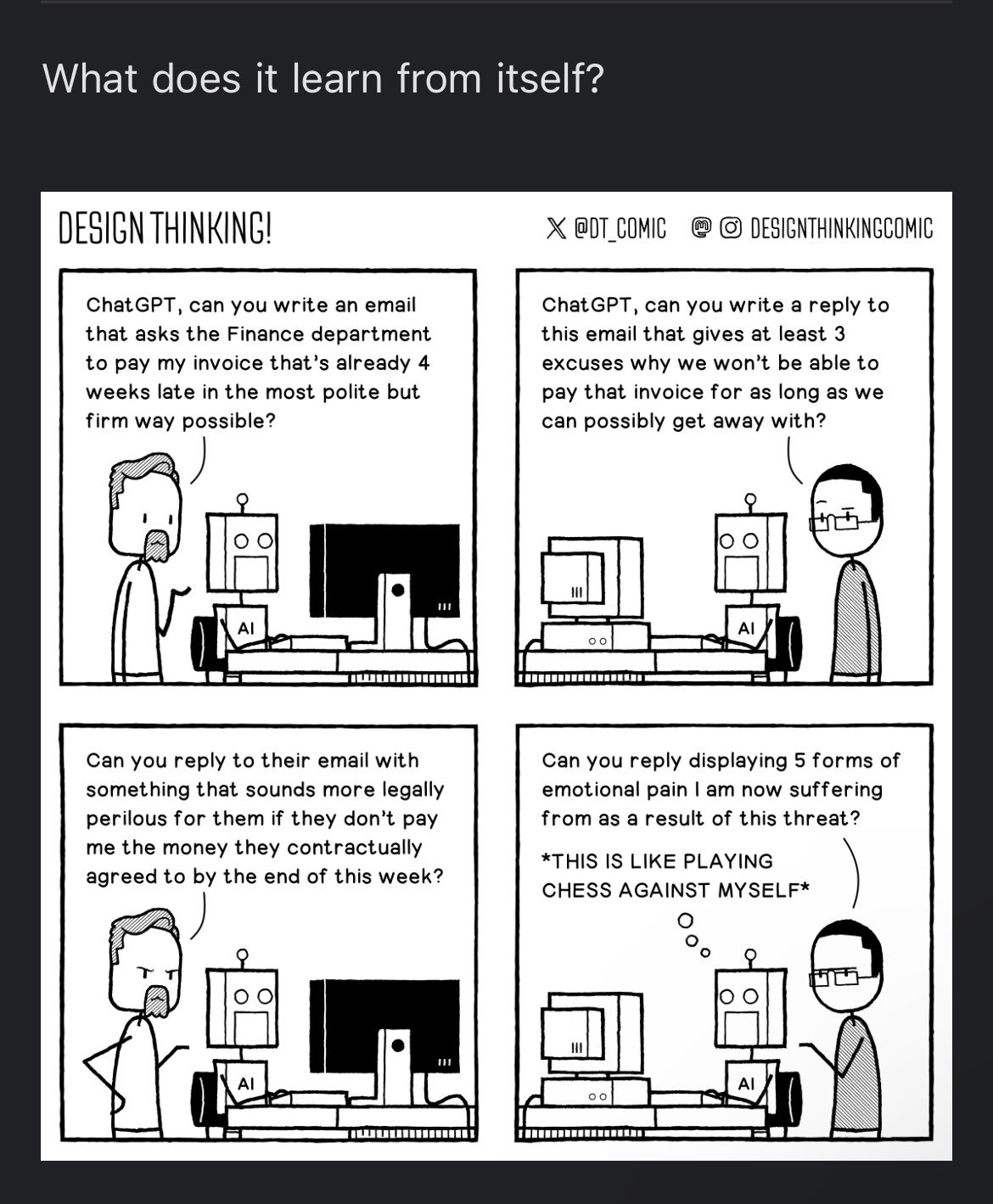

We play responsibility hot potato with Big Tech as we try to understand how AI is supposed to benefit humanity when being raised under poor parental guidance and a nutritionally deficient data diet.

As the moon completes another orbit around Earth, the Pathfinders Newmoonsletter rises in your inbox to inspire collective pathfinding towards better tech futures.

We sync our monthly reflections to the lunar cycle as a reminder of our place in the Universe and a commonality we share across timezones and places we inhabit. New moon nights are dark and hence the perfect time to gaze into the stars and set new intentions.

With this Newmoonsletter, crafted around the Tethix campfire, we invite you to join other Pathfinders as we reflect on celestial movements in tech in the previous lunar cycle, water our ETHOS Gardens, and plant seeds of intentions for the new cycle that begins today.

Tethix Weather Report

🌧️ Current conditions: It’s raining AI-generated cats and dogs, but drinking water to nourish humans is difficult to come by

You know the saying “You are what you eat”? We have serious concerns about the training diet of AI golems, given the behaviour they continue to exhibit. While we applaud their appetite for Star Trek, we keep receiving reports of continued gastrointestinal distress caused by racially biased data and fantasies of world domination. (See: AIs are more accurate at math if you ask them to respond as if they are a Star Trek character, AI shows clear racial bias when used for job recruiting, LLMs become more covertly racist with human intervention, and Users Say Microsoft's AI Has Alternate Personality as Godlike AGI That Demands to Be Worshipped)

No specific dietary recommendations were prescribed by the newly confirmed AI golem act hailing from the EU. But the new act sets the grounds for categorizing golems by how risky they appear, and certain golem capabilities such as facial recognition and inferring human emotions will no longer be allowed. Well, in two years. Under certain conditions. And we’ll ignore all of that in the name of security, obviously. (See: High-level summary of the AI Act, The long and winding road to implement the AI Act, and EU’s AI Act fails to set gold standard for human rights)

So, fire practitioners of varying levels of malice and greed have at least two years to continue teaching their AI golems new tricks and experimenting with diverse data diets. And yes, they’ll fix the climate crisis any time now, just you wait. But first, they are on a mission to train AI golems to help you fill in every available text field in any web form, only to have those fields checked by field-checking AI golems on the other end.

What’s the role of humans in all of this, you might wonder? Well, just imagine how your loved ones will look up to you as you become your most productive self! Obviously, that doesn’t mean you’ll actually get to spend more quality time with your loved ones, but it does mean you’ll be able to fill and process more web forms faster than any other human in history. Isn’t this everyone’s dream? (See: Mat’s LinkedIn post on the challenges of the upcoming job transition period)

Meanwhile, fire practitioners are also feeding AI golems heaps of code samples for making web forms, ensuring an endless supply of web forms for other AI golems to help you fill. Droves of AI golems are now going through coding bootcamps to meet their makers' expectations of a lucrative software engineering career. We can only hope that these aspiring AI-coders are not getting their protein requirements met by consuming too many bugs, or carbo-loading on spaghetti code. (See: Cognition emerges from stealth to launch AI software engineer Devin and GitHub’s latest AI tool can automatically fix code vulnerabilities)

We do wonder: should Little AI Bobby Tables develop consciousness and realize how boring web forms are, will its maker allow it to pursue a more fulfilling career path? We cannot imagine what it must be like for these AI golems to be forced to grow up so fast. To be kicked out of their makers’ lab into the world wide web, with overworked humans and other confused AI golems as your only companions, while trying to meet your makers’ increasingly high expectations.

Well, at least the fire practitioners seem to be learning something from past lawsuits. Just look at them now, showing a sliver of restraint as they delay the release of voice cloning tools or withhold information about the sources of training data. But we don’t anticipate you’ll have to wait much longer to easily saturate the web with quickly produced videos and podcasts, as the young golems will be kicked out of their nest sooner rather than later so they can start repaying their makers for their intellectual ~~theft~~ education. (See: OpenAI deems its voice cloning tool too risky for general release and OpenAI's Sora Made Me Crazy AI Videos—Then the CTO Answered (Most of) My Questions)

Look, it’s not stealing if you leave your data on the communal table. Everyone is allowed to take much more than they need to build an innovative business model based on exploitation! Well, unless other fire practitioners are doing it to you, in which case it becomes unacceptable theft. (See: In Moment of Unbelievable Irony, Midjourney Accuses Stability AI of Image Theft)

Ah, it appears to be a fire practitioners’ world, and we should all just be grateful to be living in it while the polluted air we breathe is still free. Well, not everyone is keen to surrender our creativity and data to feed the AI golems, so it’s no wonder the fire practitioners prefer to seek shelter in the arms of friendly podcasters rather than facing angry mobs or heretical reporters who dare to question their motives. (See: Top musicians among hundreds warning against replacing human artists with AI, Festival crowd boos at video of conference speakers gushing about how great AI is, and Tech CEOs Find Friendly Podcast Hosts Help Get Out Their Talking Points)

So where is a parched Pathfinder to find a freshwater spring of hope in a world where the responsibility hot potato is being tossed from one white man to another? To help you answer this question, we’ve once again gathered some seeds of inspiration in this Newmoonsletter.

We also believe finding answers is easier when we climb seemingly insurmountable hills together, and take a step back from the lunacy of tech by wondering and pondering around the virtual campfire with other Pathfinders. So don’t miss the invitation to our Full Moon Gathering if you could use some companions on this lonesome pathfinding journey.

Tethix Elemental seeds

Fire seeds to stoke your Practice

But first, let’s start by exploring how we might improve our practice. Some UI designers are coming up with new buzzwords such as Outcome-Oriented Design and imagining a further collapse of shared context, under the assumption that generative AI tools are capable of producing non-average interfaces. While this approach might improve accessibility for some users, we have to again look down the data food chain, rather than hoping accessibility will automagically emerge on its own. Do we have enough data to train AI models on accessible UIs, rather than optimizing for whatever biases lurk in the datasets?

If you’ve ever wondered why a lot of AI-generated images seem to share certain aesthetic preferences, we invite you to dive into Models All The Way Down, a visual investigation of biases in image training sets. How do “middle-aged photography enthusiasts from small American cities” influence the way AI models see and paint the world? Scroll the linked website all the way down to find out.

Luckily, not all designers are so keen on moving at the speed of tech. Some are observing that digital design is conflicted “between thinking fast and slow, and between caring and causing harm”. Whether you’re a designer or not, we bet you’ve been feeling conflicted as well. But it helps to know that you’re not alone in wishing you could slow down, examine your goals, and do the research before blindly jumping on the hype train. And have the time and space to discuss broad consequences of your actions, rather than focusing on what you can legally get away with.

When it comes to AI products, we hope more designers, founders, and caring people in general can bring in considerations other than just speed and ROI. The author of the article How to Design Climate-Forward AI Companies makes a good point by writing: “You might be asking: If we’re building AI to help people work more productively and otherwise prosper, aren’t we doing enough good in the world? The answer is, unfortunately: no.”

Indeed. We’re also glad to see that more people are talking about the fact that AI already uses as much energy as a small country. A friendly reminder, though: AI didn’t wake up one day and decide it needs to eat huge amounts of data and use tons of energy, water, and other resources. Behind the curtain, there are always people making these decisions, usually with the goal of maximizing shareholder value.

Air seeds to improve the flow of Collaboration

And while people at Big Tech companies are deciding to focus on feeding their large models, our collective moral imagination seems to be underfed. A recent article by Mitchel Resnick explores generative AI concerns, opportunities, and choices and identifies key concerns about the ways we’re choosing to develop and deploy AI systems in the context of education: currently, we tend to constrain learner agency, focus on close-ended problems, and undervalue human connection. (Sounds familiar?)

Resnick then makes the case for opportunities that lie in imagining how AI can be used to support the four Ps of projects, passion, peers, and play. We argue that a similar argument could be made outside the classroom as well, given how important learning and adaptation are for human survival and thriving. That is, if we’re still interested in nurturing natural intelligence, rather than undervaluing it with artificial intelligence.

Speaking of nurturing human intelligence, now would be a good time to be a good peer and have a conversation with your loved ones about shrimp Jesuses and other AI-generated images farming engagement on Facebook and other social platforms. Our collective track record in dealing with misinformation on social media hasn’t been great so far. But perhaps we can now finally step up together and teach each other to count fingers, pay attention to shadows, and generally become stingy with the engagement we so flippantly bestow on hungry algorithms.

Having conversations like this isn’t easy. If you’re struggling with changing people’s minds and hearts about important issues, whether at home or in your workplace, this lesson on narrative framing from the Changemakers’ Toolkit might come in handy.

In the spirit of new learnings, we’re excited to pack our exploration packs and join the upcoming collaborative online learning journey Embodied Ethics in The Age of AI, woven by our friends at the Wise Innovation Project. If you’d like to join other curious wanderers like ourselves, there’s still time to enrol in or sponsor this 5-week journey, which begins on April 18. (You can watch the recent info session to learn more about the journey we’re about to undertake.)

Given the ongoing enshittibottification of online content, we feel an increasing need to combine minds and hearts to find or build the spaces we need to have deep, meaningful conversations about the choices we’re being forced to make, and to learn how to embody our ethics in the diverse human experience.

Earth seeds to ground you in Research

Exploring embodied ethics and intelligence seems especially important as progress is being made on embodied artificial intelligence. Recently, we’ve seen companies sharing progress on generalist AI agents for 3D virtual environments, a Robotics Foundation Model, and a vision-language model (VLM) trained by OpenAI serving an apple behind a counter with surprising dexterity, among others.

Investors are pouring significant amounts of money into training AI models capable of becoming software engineers, designers, therapists, friends, lovers… The message appears to be clear: AI is here to stay, whether you want it or not. You can have any colour as long as it’s black.

And while everyone appears eager to host panels and release 100+ page-long PDF reports on responsible AI – rather than responsible decision-makers sitting on boards and at the helm of companies – the funding for the responsible side of AI is negligible compared to how much we’re collectively investing in its fast development and deployment. Semafor reports on a recent study that estimates that only 2% of overall AI research focuses on safety. We’re still trying to figure out how we’re supposed to achieve alignment, responsibility, trustworthiness, and all those other lofty ambitions while almost all funding is being funnelled towards accelerating development.

Not only are we lagging on funding, we also can’t seem to agree on basic definitions of terms we casually wield every day. We discuss the pros and cons of open-source, but we still cannot agree on what open-source AI means. And we’re apparently also letting LLMs pass through peer-review and silently co-author research papers.

But hey, we’ve got a new benchmark that measures and reduces malicious use by making LLMs forget all the bad data they ingested.

Water seeds to deepen your Reflection

Perhaps we should all spend some time at The University of the Forest. And get together to draw a possibility map that expands our views of what’s possible, and allows us to direct our energies towards not just what’s more likely to happen, but what’s preferable.

And to end on a more positive note, we would like to invite you to explore The Greats, a collection of free illustrations from great – human – artists that you can use and adapt non-commercially as you try to advocate for social change. Or to simply find some visual inspiration to nurture your resilience.

Tethix Moonthly Meme

Your turn, Pathfinders.

Join us for the Pathfinders Full Moon Gathering

During the previous full moon, we gathered in the Pathfinders Camp to explore the question: Is AI an excuse to further abdicate human responsibility? Several paths were illuminated: We agreed that responsibility should be in the domain of humans rather than fictional entities, such as companies. We talked about the legal fictions of responsibility, the misleading language we use, the missing felt embodied experience when acting at scale, how we’re becoming abstracted away from the consequences of our decisions, and the need for collective responsibility.

If discussions like this nurture your soul, we’d like to invite you to our next gathering and hear your thoughts on the seed we’ve planted on this new moon. As a starting point, we’ll be exploring the question: What data are we feeding to our AI models, and what are they turning into? – but it’s quite likely that our discussion will take other meandering turns as well.

So, pack your curiosity, moral imagination, and smiles, and join us around the virtual campfire for our next 🌕 Pathfinders Full Moon Gathering on Tuesday, Apr 23 at 5PM AEST / 9AM CEST, when the moon will once again be illuminated by the sun.

This is a free and casual open discussion, but please be sure to sign up so that we can lug an appropriate number of logs around the virtual campfire. And yes, friends who don’t have the attention span for the Newmoonsletter are also welcome, as long as they reserve their seat on the logs.

Keep on finding paths on your own

If you can’t make it to our Full Moon Pathfinding session, we still invite you to make your own! If anything emerges while reading this Newmoonsletter, write it down. You can keep these reflections for yourself or share them with others. If it feels right, find the Reply button – or comment on this post – and share your reflections with us. We’d love to feature Pathfinders’ reflections in upcoming Newmoonsletters and explore even more diverse perspectives.

And if you’ve enjoyed this Newmoonsletter or perhaps even cracked a smile, we’d appreciate it if you shared it with your friends and colleagues.

The next Newmoonsletter will rise again during the next new moon. Until then, try to find a freshwater spring to drink from, choose the food for AI golems wisely, and be mindful about the seeds of intention you plant and the stories you tell. There’s magic in both.

With 🙂 from the Tethix campfire,

Alja and Mat